Cloud native applications are applications that are scalable and reliable by construction. The difference between cloud native and non-cloud-native applications is that non-cloud-native applications are made scalable when a requirement demands it, not by construction. "By construction" means that the design of the application must address scalability and reliability by default. Any application faces two major kinds of failure: failures due to code and failures due to performance. Cloud native applications can detect run-time failures and mitigate them on their own. Cloud native applications are usually container-packaged, microservices-oriented and dynamically orchestrated.
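
Detecting and mitigating run-time failures is usually the job of the orchestrator, which probes each instance and replaces unhealthy ones (Kubernetes liveness probes, which restart a container after a configured number of consecutive failed checks, are one real-world example). The following is a minimal Python sketch of that idea under simplifying assumptions, not any platform's actual implementation; the `Supervisor` class, its `failure_threshold` parameter and the simulated probe results are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Supervisor:
    """Hypothetical supervisor: restarts a service after repeated failed health checks."""

    health_check: Callable[[], bool]  # returns True when the service instance is healthy
    restart: Callable[[], None]       # mitigation action, e.g. replace the instance
    failure_threshold: int = 3        # consecutive failures tolerated before restarting
    consecutive_failures: int = 0
    restarts: int = 0

    def tick(self) -> None:
        """Run one probe cycle and restart the service once the threshold is reached."""
        if self.health_check():
            self.consecutive_failures = 0
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.restart()
            self.restarts += 1
            self.consecutive_failures = 0


# Simulated probe results: healthy, four failures in a row, then healthy again.
probe_results = iter([True, False, False, False, False, True])
supervisor = Supervisor(
    health_check=lambda: next(probe_results),
    restart=lambda: print("restarting service"),
)
for _ in range(6):
    supervisor.tick()
print(supervisor.restarts)  # 1
```

Requiring several consecutive failures before restarting is a deliberate choice: a single slow or dropped probe does not trigger a restart, which avoids needless churn.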

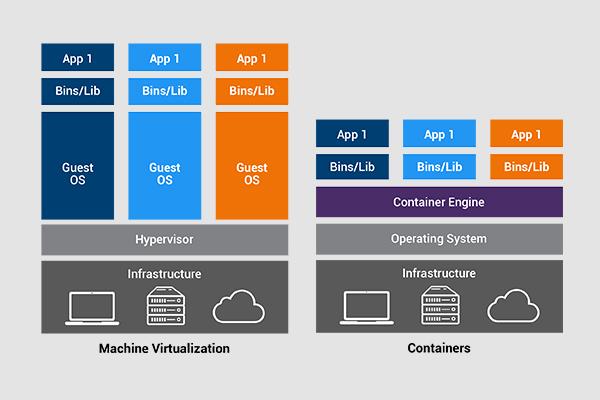

Application Containers: Cloud native applications are typically container-based, which enables deployment across different flavours of an operating system (for example, different Linux distributions). The biggest benefits of containers are faster deployment, portability and cost efficiency. Containers are just processes running on the host system. Unlike a VM, which provides hardware virtualization, a container provides operating-system-level virtualization by abstracting the “user space”. Containers require fewer system resources because they share the host's kernel and do not need a full guest operating system image. Containers are well suited to modern application development approaches such as microservices.

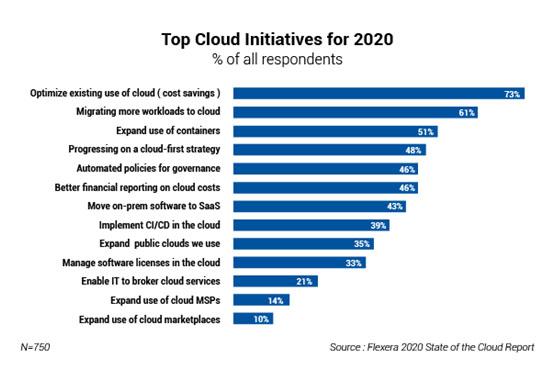

Container-as-a-service use cases are gaining adoption in the cloud, and many cloud-based applications are built on container-as-a-service offerings. Monolithic applications are decomposed into microservices, each packaged as its own container image.

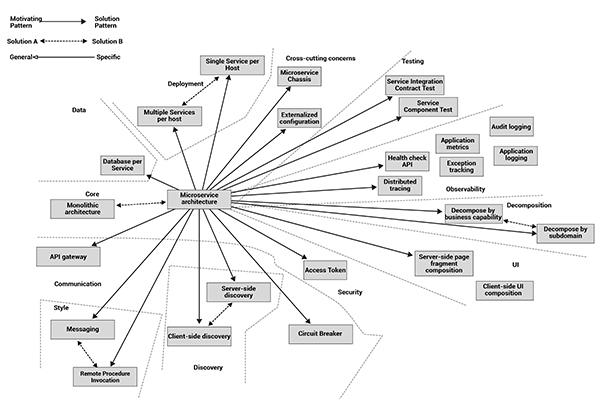

Microservices: Microservices is an architectural style in which autonomous, independently deployable services collaborate to form a broader system or application. The benefits of microservices include the following.

- Applications are decomposed into smaller services, and each service can be owned by a small team.

- A good microservice is one that can be thrown away and rewritten if necessary.

- Microservices offer upgrade flexibility: one service can run one version while another service runs a different version.

- Microservices are loosely coupled and scalable.

- Microservices increase deployment frequency because each service is lightweight and can be deployed independently of the others.
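
To make "small, independently deployable and independently versioned" concrete, here is a minimal Python sketch of a single microservice written against the standard-library WSGI interface. The service name `orders`, the version string and the `/health` and `/version` endpoints are hypothetical choices for illustration; a real service would add its business endpoints and run in its own container.

```python
import json
from wsgiref.simple_server import make_server
from wsgiref.util import setup_testing_defaults

SERVICE_NAME = "orders"     # hypothetical service name
SERVICE_VERSION = "2.1.0"   # versioned independently of every other service


def app(environ, start_response):
    """A tiny WSGI microservice exposing health and version endpoints."""
    path = environ.get("PATH_INFO", "/")
    if path == "/health":
        status, body = "200 OK", {"status": "ok"}
    elif path == "/version":
        status, body = "200 OK", {"service": SERVICE_NAME, "version": SERVICE_VERSION}
    else:
        status, body = "404 Not Found", {"error": "not found"}
    payload = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


def serve(port: int = 8080) -> None:
    """Serve the app over HTTP; the port is an arbitrary choice."""
    with make_server("", port, app) as server:
        server.serve_forever()


def call(path: str):
    """Invoke the service in-process (no network), handy for a quick check."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    result = {}

    def start_response(status, headers):
        result["status"] = status

    body = b"".join(app(environ, start_response))
    return result["status"], json.loads(body)


print(call("/version"))  # ('200 OK', {'service': 'orders', 'version': '2.1.0'})
```

Because each service reports its own version, an orchestrator or a human operator can see that, say, `orders` 2.1.0 is running alongside an older version of another service, which is the upgrade flexibility described in the list above.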

Services Orchestration:

Orchestration is the process of lining up all the (infrastructure) components needed to deliver your digital service to your customers. Every moving part in an IT environment is part of orchestration: everything from a code change to production is orchestration. Orchestration is the heartbeat of the lifecycle of every iteration you make. The IT services part of orchestration has four steps.

- Provision the infrastructure.

- Code and commit changes.

- Build and test the service.

- Deploy and run the service.

A cloud native approach helps automate all of the above steps.
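
The four steps can be pictured as an automated pipeline in which each stage runs only if the previous one succeeded. The Python sketch below shows that control flow only; `run_pipeline` and the step functions are hypothetical placeholders standing in for real tooling such as infrastructure-as-code, version control, CI and CD systems.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]  # (step name, action returning True on success)


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order, stop at the first failure; return the names of completed steps."""
    completed = []
    for name, action in steps:
        print(f"running: {name}")
        if not action():
            print(f"failed: {name}; stopping pipeline")
            break
        completed.append(name)
    return completed


# Hypothetical placeholders; a real pipeline would call out to actual tools here.
def provision() -> bool:
    return True


def commit() -> bool:
    return True


def build_and_test() -> bool:
    return True


def deploy_and_run() -> bool:
    return True


pipeline = [
    ("provision the infrastructure", provision),
    ("code and commit changes", commit),
    ("build and test the service", build_and_test),
    ("deploy and run the service", deploy_and_run),
]
print(run_pipeline(pipeline))
```

Stopping at the first failed step means a change that fails its tests never reaches deployment, which is the main safety property such automation provides.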

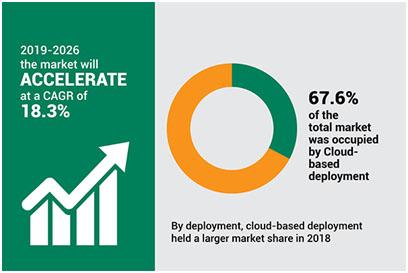

To conclude, a cloud native application development approach is critical to making full use of the cloud. Many legacy applications are being migrated to cloud native applications to improve their scalability and availability.

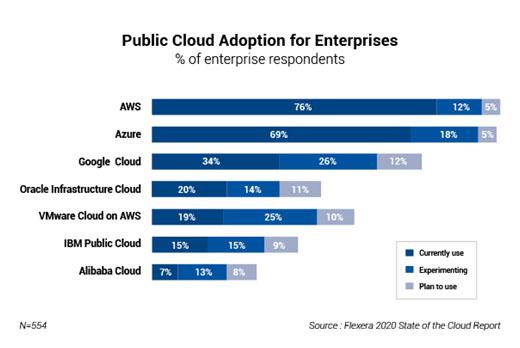

Cloud Costing Model: Understanding the cloud costing model is a must. The number of products offered by a cloud service provider is daunting: for example, AWS has 169 products, whereas GCP has 90. Many costs are hidden and must be discovered along the way. Therefore, the right experts are necessary to keep cloud costs as optimized as possible.
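
A simple way to surface hidden costs is to break a monthly estimate down by line item rather than looking only at compute. The Python sketch below does that arithmetic; every rate in it is a made-up placeholder, not a real AWS or GCP price, and the usage figures are hypothetical.

```python
from typing import Dict

# Made-up placeholder rates, NOT real AWS or GCP prices.
HYPOTHETICAL_RATES: Dict[str, float] = {
    "compute_hours": 0.10,     # per instance-hour
    "storage_gb_month": 0.02,  # per GB-month stored
    "egress_gb": 0.09,         # per GB transferred out of the cloud
}


def monthly_cost(usage: Dict[str, float], rates: Dict[str, float]) -> Dict[str, float]:
    """Return a per-item cost breakdown plus a 'total' entry, rounded to cents."""
    breakdown = {item: round(quantity * rates[item], 2) for item, quantity in usage.items()}
    breakdown["total"] = round(sum(breakdown.values()), 2)
    return breakdown


# Hypothetical usage: two instances running all month (~730 hours each),
# 500 GB stored and 1,000 GB served to users.
usage = {"compute_hours": 2 * 730, "storage_gb_month": 500, "egress_gb": 1000}
print(monthly_cost(usage, HYPOTHETICAL_RATES))
```

With these invented numbers, data egress, an item that is easy to overlook when sizing only compute, accounts for more than a third of the total.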
