The basic ingredient required for AI is a successful machine learning model. To create and run that model, however, you need the right infrastructure capacity, good domain knowledge, and a large amount of data. A machine learning model is a software artifact produced by training algorithms on data, and its success depends on having the right training data and carefully tuned algorithms. Running the model requires substantial GPU, TPU, and CPU capacity, which makes many enterprises wary of investing in AI. Under a capex model, AI infrastructure calls for a very large up-front investment, and because the technology evolves so quickly, enterprises find it difficult to keep replacing that infrastructure.

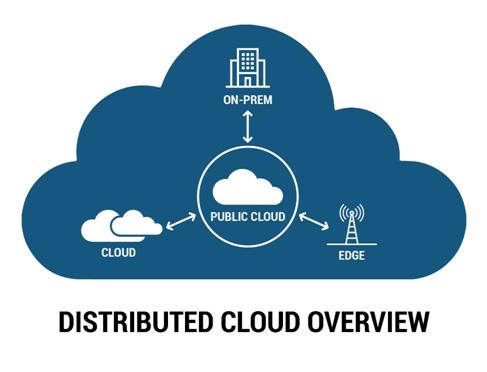

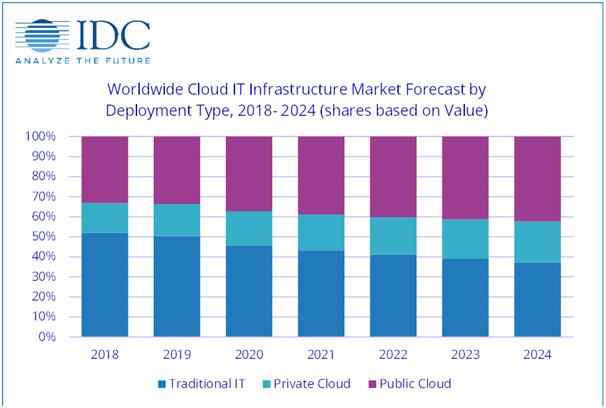

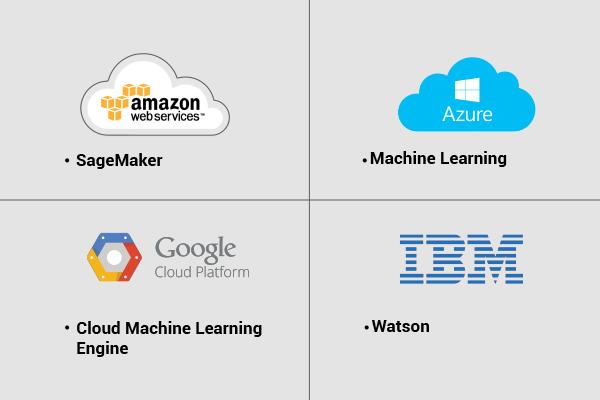

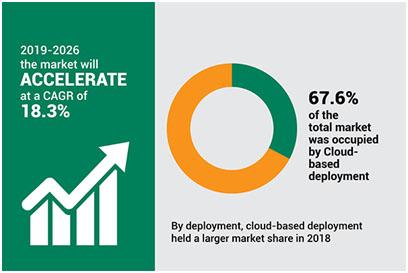

Essentially, for businesses to take advantage of AI on their own hardware, they must make a large investment in capacity. The cloud offers an alternative: infrastructure capacity with a lower initial investment and pay-as-you-go pricing. In this blog, let us look at the machine learning as a service (MLaaS) offerings of four major cloud service providers: Amazon, Microsoft Azure, Google Cloud (GCP), and IBM Watson.
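To make the capex versus pay-as-you-go trade-off concrete, here is a minimal Python sketch of a break-even calculation. All prices are hypothetical placeholders, not quotes from any provider, and the model assumes a flat hourly cloud rate and a flat hourly running cost for owned hardware, ignoring discounts, depreciation, and hardware refresh cycles. The function names are illustrative only.

```python
# Minimal break-even sketch: owning GPU hardware (capex) vs. renting cloud capacity (pay as you go).
# All figures below are hypothetical placeholders, not real provider prices.

def total_cost_owned(hours: float, capex: float, owned_hourly_opex: float) -> float:
    """Up-front purchase plus a flat per-hour running cost (power, cooling, staff)."""
    return capex + owned_hourly_opex * hours


def total_cost_cloud(hours: float, cloud_hourly_rate: float) -> float:
    """Pay as you go: cost starts at zero and grows linearly with usage."""
    return cloud_hourly_rate * hours


def breakeven_hours(capex: float, owned_hourly_opex: float, cloud_hourly_rate: float) -> float:
    """Usage hours after which owning becomes cheaper than renting.

    Solves capex + owned_hourly_opex * h == cloud_hourly_rate * h for h.
    """
    if cloud_hourly_rate <= owned_hourly_opex:
        # Renting is never more expensive per hour, so buying never pays off.
        return float("inf")
    return capex / (cloud_hourly_rate - owned_hourly_opex)


if __name__ == "__main__":
    CAPEX = 30_000.0   # hypothetical price of a GPU server
    OWNED_OPEX = 0.50  # hypothetical running cost per hour of owned hardware
    CLOUD_RATE = 3.00  # hypothetical on-demand price per GPU-hour

    h = breakeven_hours(CAPEX, OWNED_OPEX, CLOUD_RATE)
    print(f"Break-even after {h:,.0f} hours of use")  # Break-even after 12,000 hours of use

    for hours in (2_000, 20_000):
        owned = total_cost_owned(hours, CAPEX, OWNED_OPEX)
        cloud = total_cost_cloud(hours, CLOUD_RATE)
        cheaper = "cloud" if cloud < owned else "owned"
        print(f"{hours:>6} h: owned={owned:,.0f} cloud={cloud:,.0f} -> {cheaper}")
```

With these placeholder numbers, a workload that runs for about 2,000 hours is far cheaper in the cloud, while sustained use beyond roughly 12,000 hours favors owning, provided the hardware is not replaced before then. Real quotes and a realistic refresh cycle are what decide the answer for a given enterprise.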

Amazon Machine Learning

SageMaker is Amazon's machine learning platform, with built-in algorithms for classification, regression, multi-class classification, k-means clustering, and more. It helps teams build models quickly using advanced algorithms. Amazon also offers large-scale on-demand infrastructure as well as serverless processing, so that models can run on the infrastructure best suited to them. SageMaker also supports popular open-source frameworks such as TensorFlow (developed by Google), Keras, and PyTorch (developed by Facebook). Finally, Amazon offers a complete MLOps toolchain (MLOps being the machine learning equivalent of DevOps).

Azure Machine Learning Platform

Azure's machine learning services fall into two parts: Azure Machine Learning Studio and Bot Service. Azure ML Studio provides a graphical, drag-and-drop interface for building machine learning workflows, covering data exploration, preprocessing, choosing methods, and validating modeling results. The main benefit of Azure is the variety of algorithms available to experiment with. The Studio supports around 100 methods that address classification (binary and multiclass), anomaly detection, regression, recommendation, and text analysis. It is worth noting that the platform offers only one clustering algorithm (K-means).

Azure serves different kinds of customers, namely data scientists, data engineers, data analysts, and so on. Azure's approach is to provide an end-to-end platform for all of them, and the product includes model management tools, Python packages, and workbench tools.

Google Machine Learning Services

As an AI-first company, Google offers a variety of AI tools for developers, enterprise operations, data scientists, and others. Its AutoML service lets users develop machine learning models with little or no programming. Google has also been a major contributor to open source, with projects such as TensorFlow and BERT, and it is arguably the largest open-source contributor among these providers, which in turn has boosted the adoption of Google tools. Today TensorFlow is one of the most widely used machine learning frameworks among developers. Its large ecosystem of open-source libraries makes it a popular choice for building applications on the cloud. Google Cloud also offers a high-end computing environment with TPU processors along with robust data security, making it one of the most versatile platforms for development and deployment, and it supports a wide range of cloud-native deployment options.

IBM Watson

IBM Watson, one of the earliest and most widely used machine learning platforms, has been around for some time. It offers a set of services for newcomers as well as experienced practitioners. Separately, IBM offers a deep neural network training workflow with a flow-editor interface similar to the one in Azure ML Studio.

Machine Learning Services Offered by the Cloud Providers

- Speech and text services

- Translation service

- Image classification

- Text classification

- Speech classification

- Facial detection

- Facial analysis

- Celebrity recognition

- Written text recognition

- Video analysis, and more

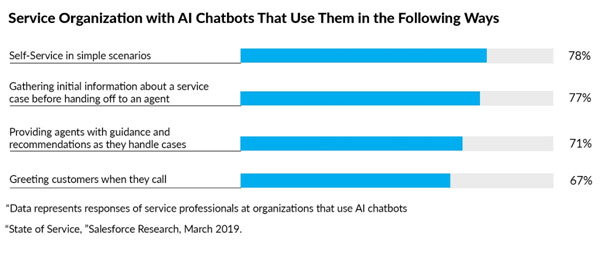

In conclusion, many cloud service providers have recognized that AI can drive business transformation, and they provide machine learning as a service so that enterprises can use readily available models. These services help in areas such as prediction, personalization, natural language processing, optimization, and anomaly detection. Businesses want a competitive edge, and AI plays a key role in providing it. To adopt AI quickly, the cloud is the way to go.

Cloud Costing Model: Awareness of the cloud costing model is a must. The number of products offered by a cloud service provider is daunting; for example, AWS has 169 products, whereas GCP has 90. Many costs are hidden and only come to light along the way. The right experts are therefore needed to keep cloud costs as optimized as possible.
